
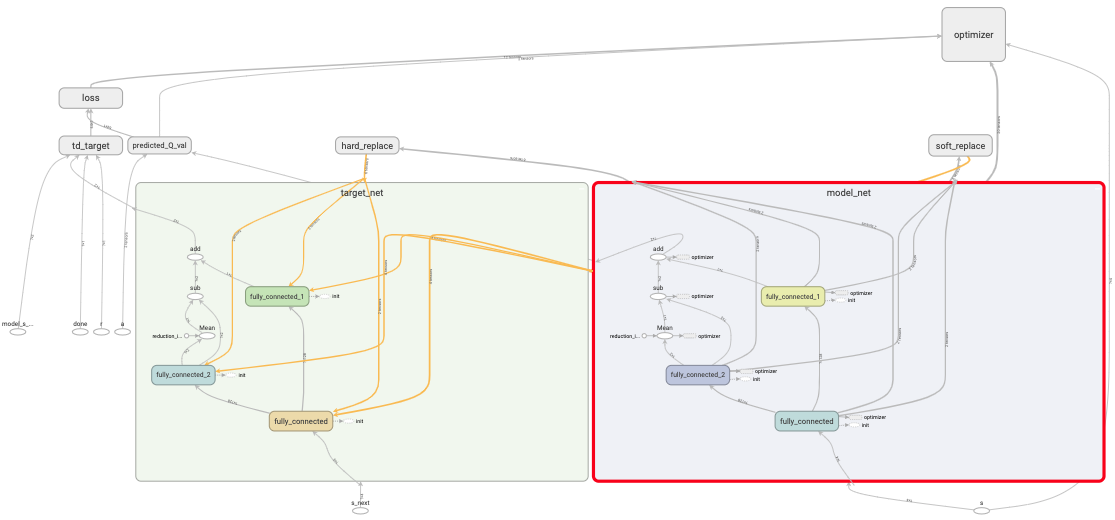

This post documents my implementation of the Dueling Double Deep Q Network (Dueling DDQN) algorithm.

A Dueling Double Deep Q Network (Dueling DDQN) implementation in TensorFlow with random experience replay. The code is tested with Gym’s discrete action space environment, CartPole-v0, on Colab.

Code on my GitHub

If GitHub is not loading the Jupyter notebook (a known GitHub issue), click here to view the notebook on Jupyter’s nbviewer.

Network = \(Q_{\theta}\)

Parameter = \(\theta\)

Network Q value = \(Q_{\theta}(s, a)\)

Value function = V(s)

Advantage function = A(s, a)

Parameter from the Advantage function layer = \(\alpha\)

Parameter from the Value function layer = \(\beta\)

(eqn 9) from the original paper (Wang et al., 2015):

\(Q(s, a; \theta, \alpha, \beta) = V(s; \theta, \beta) + \left[ A(s, a; \theta, \alpha) - \frac{1}{|A|} \sum_{a'} A(s, a'; \theta, \alpha) \right]\)
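One consequence of subtracting the mean advantage is worth spelling out. Averaging eqn 9 over all actions (with \(|A|\) read as the number of actions) cancels the two advantage sums, so the mean Q value of a state equals V. This is a short derivation from eqn 9 using the same symbols, not an additional equation from the paper:

\(\frac{1}{|A|} \sum_{a} Q(s, a; \theta, \alpha, \beta) = V(s; \theta, \beta) + \frac{1}{|A|} \sum_{a} A(s, a; \theta, \alpha) - \frac{1}{|A|} \sum_{a'} A(s, a'; \theta, \alpha) = V(s; \theta, \beta)\)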

In the code, V is the value function layer and A is the advantage function layer:

    # construct neural network
    # (method of the agent class; uses the TensorFlow 1.x API, tf.contrib was removed in 2.x)
    def built_net(self, var_scope, w_init, b_init, features, num_hidden, num_output):
        with tf.variable_scope(var_scope):
            # shared hidden layer feeding both streams
            feature_layer = tf.contrib.layers.fully_connected(features, num_hidden,
                                                              activation_fn=tf.nn.relu,
                                                              weights_initializer=w_init,
                                                              biases_initializer=b_init)
            # value stream: one scalar V(s) per state
            V = tf.contrib.layers.fully_connected(feature_layer, 1,
                                                  activation_fn=None,
                                                  weights_initializer=w_init,
                                                  biases_initializer=b_init)
            # advantage stream: one A(s, a) per action
            A = tf.contrib.layers.fully_connected(feature_layer, num_output,
                                                  activation_fn=None,
                                                  weights_initializer=w_init,
                                                  biases_initializer=b_init)
            # subtract the mean advantage over actions, refer to eqn 9 from the original paper
            Q_val = V + (A - tf.reduce_mean(A, axis=1, keepdims=True))
            return Q_val
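To see what the `Q_val` line computes, here is a minimal pure-Python sketch of the same aggregation on a hand-made batch. The function name `dueling_q` and the numbers are made up for illustration; the actual implementation does this on tensors inside the TensorFlow graph.

```python
from statistics import fmean

def dueling_q(values, advantages):
    """Combine V(s) and A(s, a) into Q(s, a) using mean-subtracted advantages.

    values:     list of floats, one V(s) per state in the batch
    advantages: list of lists, advantages[i][a] = A(s_i, a)
    """
    q_batch = []
    for v, adv_row in zip(values, advantages):
        mean_adv = fmean(adv_row)  # (1/|A|) * sum over a' of A(s, a')
        q_batch.append([v + (a - mean_adv) for a in adv_row])
    return q_batch

# Hypothetical batch of two states with two actions each (as in CartPole).
values = [2.0, -1.0]
advantages = [[1.0, 3.0], [0.5, 0.5]]

q = dueling_q(values, advantages)
print(q)  # [[1.0, 3.0], [-1.0, -1.0]]
```

In each row the Q values average back to the state's V (2.0 and -1.0 here), and when all advantages are equal, as in the second state, every action gets exactly V.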

Dueling Network Architectures for Deep Reinforcement Learning (Wang et al., 2015)
